By Josiah Hsiung, Principal, NightDragon and Alec Kiang, Associate, NightDragon

Foreword

Artificial Intelligence is making unprecedented inroads into the world of cybersecurity and ushering in a new wave of threats and potential safeguards. Faced with new types of attacks to prepare for, enterprises have raised questions about how to manage risk effectively and are looking for novel solutions to ensure the responsible usage of AI. This has resulted in significant innovation, advancement, and commercialization of ML model security tools that can help address data privacy issues, sensitive data leakage, and model robustness.

In a world where machine learning models are becoming the norm, security is essential. Until the regulatory landscape and AI governance space mature, enterprises are left to their own devices to be proactive and develop their own frameworks for securing ML models. New vendors continue to emerge and meet the demand for ML model security as AI applications continue to skyrocket – highlighting a new era in cybersecurity and machine learning.

Vijay Bolina, Chief Information Security Officer, Google DeepMind

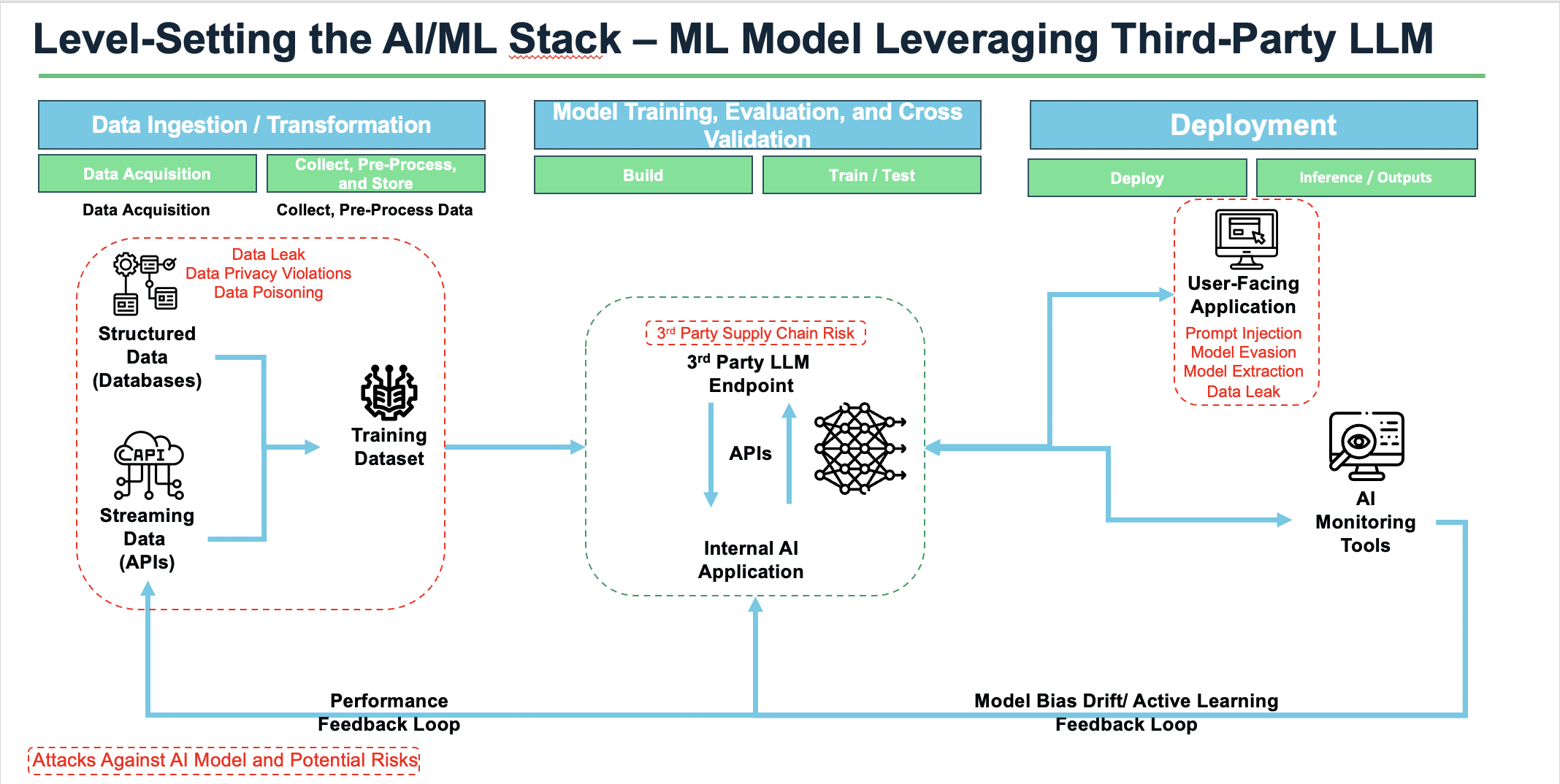

In the second post of our AI blog series, we examine the machine learning development lifecycle to identify key areas where the ML model security technology stack comes into play. The ML model security market is ripe for innovation and far from saturation. As commercial adoption of ML accelerates, we will inevitably start to see a rise in ML-specific attacks.

A New Wave of AI Security Innovation, Policies to Respond to Risk

“I think that there is a good market opportunity for startups and applications to protect AI models. The current discussion about AI and generative AI is rather about threats leveraging AI, and not about threats against AI. Security risks of AI and generative AI systems are not widely known yet, but I think this will change quickly in the near term”

Christoph Peylo, Chief Cyber Security Officer at Bosch

Driven by regulation, new adversarial attack vectors, and enterprise stakeholder risks, we believe that there will be accelerated commercialization of solutions that are focused on protecting ML models through their entire lifecycle. To secure ML models, enterprises must consider the attack surface throughout the development cycle to determine and prioritize areas that need to be secured.

Attacks against AI models, and the risks they create, affect different stages of the ML development cycle, and each stage requires its own security protocols. Existing cybersecurity solutions (e.g. identity and access management, data security, infrastructure security, software supply chain security, and threat intelligence capabilities) can support an enterprise’s ML security posture. However, these widely adopted solutions do not directly address ML model risks, and that gap has created a new market for ML security-specific vendors.

“Establishing a framework for data privacy, model robustness, and model explainability is crucial to support AI risk mitigation and ensure AI-enabled system reliability.”

Vijay Bolina, Chief Information Security Officer at Google DeepMind

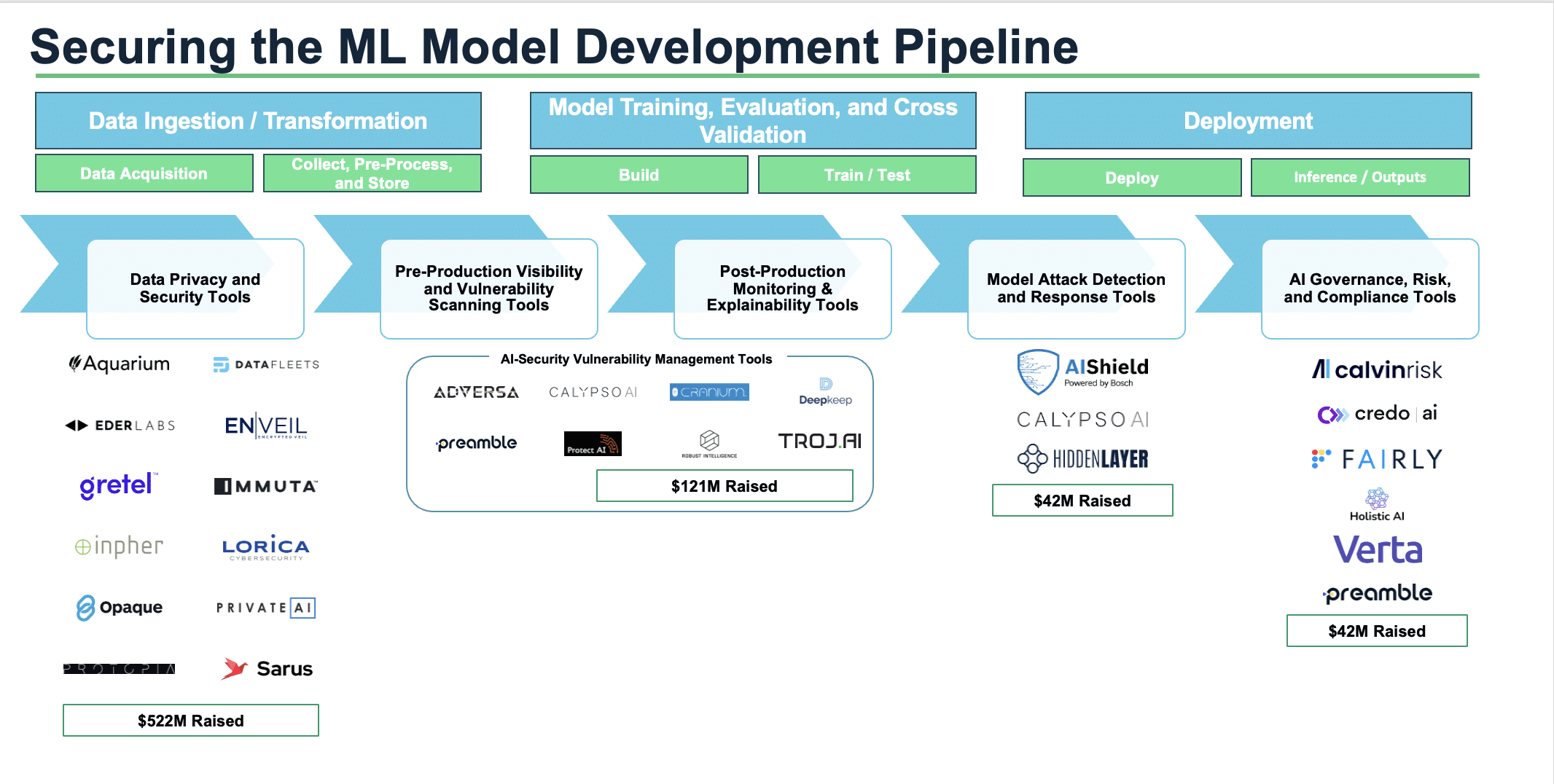

Based on the current landscape, ML security vendors can enhance enterprise AI security and safety through the following features:

- Training Data Security and Privacy Tools: Securing training data through (i) producing synthetic data to train ML models, (ii) transforming representations of training data used for ML models to reduce sensitive information exposure, and (iii) securing data sharing and access management (protect and encrypt data).

- Pre-Production Visibility and Vulnerability Scanning Tools: Stress-testing models to ensure “robustness” (an ML model’s ability to resist being fooled), serving as a precaution that catches AI risk early in the development cycle.

- Post-Production Monitoring Tools: Tracking post-production models to identify anomalies and model drift (degradation of model accuracy and performance). These tools also help address model explainability – understanding the rationale as to why ML models are outputting certain results.

- Model Attack Detection and Response Tools: ML model protection, intrusion detection, and threat intelligence feeds that allow for real-time detection and remediation of ML-based attacks (data poisoning, model evasion, model extraction).

- AI Governance, Risk, and Compliance Tools: Safety tools that ensure that developed models follow regulatory guidelines and enterprise-determined guardrails.
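To make the post-production monitoring idea above more concrete, here is a minimal sketch of one way a drift check could work. It compares the distribution of a model's scores at training time with the scores seen in production using the Population Stability Index (PSI). This is our own illustration, not how any particular vendor implements monitoring: the function name, the bin count, and the 0.25 alert threshold are all assumptions chosen for the example.

```python
import math
from typing import List, Sequence

# Assumed alert threshold for illustration only; tune it for your own models.
DRIFT_THRESHOLD = 0.25


def population_stability_index(
    baseline: Sequence[float],
    live: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of model scores; larger values suggest more drift."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the baseline range land in an edge bucket.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical score samples: training-time scores vs. production scores.
training_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(training_scores, production_scores)
drift_detected = psi > DRIFT_THRESHOLD
```

In this toy example the production scores are concentrated in the upper half of the training range, so the check flags drift. A real monitoring tool would track many signals beyond a single score distribution.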

Vendors that enable ML security and responsible AI have raised more than $687M in total from VCs (Source: PitchBook), which signals meaningful investment in a space that protects enterprises lacking the resources to defend themselves against the latest cybercrime schemes. While our market map includes only vendors that we believe are primarily focused on ML security, we recognize that other vendors may sell products that are tangentially related to ML security (e.g. other ML operations tools).

Regulating Artificial Intelligence – A Push for Additional Security Measures

In addition to commercial solutions, we are also seeing governments move to act. While these efforts are still in their infancy, the EU, the US government, and government agencies across the globe are starting to propose new regulations, guidelines, and research on frameworks for AI security.

- The FDA published an action plan to regulate AI-based Software as a Medical Device

- The Algorithmic Accountability Act, proposed in the House of Representatives and Senate, states that companies utilizing AI must conduct critical impact assessments of automated systems they sell and use in accordance with regulations set forth by the FTC

- The EU AI Act, which establishes a regulatory framework and enhances governance and enforcement of existing law on fundamental rights and safety requirements applicable to AI systems

While governments around the world continue to explore ways to regulate generative AI, leaders from Google DeepMind, Anthropic, and other AI labs have expressed their concerns through a Statement on AI Risk published on the Center for AI Safety’s website. The statement highlights wide-ranging concerns that the risks and dangers of AI parallel those of pandemics and nuclear weapons.

NightDragon’s Thoughts and Predictions of the AI Security Market

- Enterprises that are currently adopting ML-specific security tools are taking a proactive approach. Many of the different types of attacks against ML models have only been demonstrated in academia. Until there have been tangible and reported instances of nation-states or hacker groups utilizing these attacks in practice, there will be a ramp time before there is massive commercial adoption of ML security tools.

- CISOs will prioritize securing training data before implementing model security tools: Enterprises, especially in heavily regulated sectors (e.g. healthcare and financial services), will have to find ways to mitigate the potential risks of sensitive data leakage and poisoning.

- Enterprises will build more internally-hosted models in the long run rather than leveraging a third-party LLM: We are progressing to a multi-model world where enterprises will build both internally-hosted models and models that are leveraging third-party LLMs. However, based on our advisors’ feedback, we believe that enterprises will skew more toward building internally-hosted models. By training and managing their own models, enterprises can build models based on proprietary data to support workflows that are unique to them. As a result, we envision long-term adoption of pre-production vulnerability testing and post-production monitoring tools that will improve model accuracy and reduce model bias.

- Successful Model Attack Detection and Response Tools must be married with threat intelligence tools: Integration into threat intelligence and extended detection and response tools will be crucial to detecting, remediating, and responding to ML-related attacks in a timely manner. When combined with threat intelligence, enterprises will be able to better anticipate ML attacks and ensure a strong ML model security foundation.

Closing Thoughts

The rapid advancement of generative AI, driven by the rise of OpenAI’s ChatGPT and the global AI arms race, presents both significant opportunities and challenges. Enterprises are embracing AI technologies at an unprecedented pace, recognizing their potential to revolutionize various industries. However, as AI becomes a strategic imperative, it is crucial to navigate the associated risks and ensure proper AI governance. The convergence of AI and cybersecurity demands increased vigilance, innovative solutions, and regulatory frameworks to protect against evolving threats and mitigate potential harm.

“Ultimately, you would want to protect the effort that you spent training the model and by implementing a security tool for your AI, you are protecting your business.”

Christoph Peylo, Chief Cyber Security Officer at Bosch

To keep track of new adversarial ML threats and mitigation methodologies, we suggest that our readers follow the MITRE ATLAS website. Similar to how MITRE ATT&CK has served as a framework for threat intelligence, we believe that MITRE ATLAS will be a strong resource to protect against adversaries employing attacks against ML models.

NightDragon is committed to staying at the forefront of the AI security landscape, supporting and collaborating with founders developing cutting-edge security solutions to safeguard against adversarial ML threats. If you’re a founder in the generative AI security space, we’d love to hear from you! Email us at [email protected] or [email protected].

Read our previous blog examining the threats facing the AI ecosystem here.